AI failures aren’t just hilarious mishaps—they’re valuable glimpses into how these systems actually work. From generating bizarre images to giving confidently wrong answers, these failures reveal the limitations of current AI technology while hinting at both challenges and opportunities for future development.

When Artificial Intelligence Gets Hilariously Real

The first time I asked an AI to create an image of “a horse riding a man,” I knew I was in for something special. What I didn’t expect was a nightmarish horse-human centaur that looked like it belonged in a museum of modern art dedicated to fever dreams. I couldn’t stop laughing for a solid five minutes.

That’s the thing about AI fails: they’re not just funny (though they absolutely are). They’re little windows into how these systems think, or rather, don’t think. They show us the limitations of technology that many headlines would have us believe is nearly omniscient.

As someone who’s spent countless hours playing with these systems, I’ve collected some truly spectacular fails. They range from mildly amusing to “wake your partner up at 3 AM because you’re cackling too hard to sleep.” Let’s break it down…

The Classic AI Hallucinations (AKA Making Stuff Up With Confidence)

My absolute favorite category of AI fails has to be when they make up information with the unwavering confidence of a toddler explaining how dinosaurs work.

Case in point: I once asked a popular AI assistant for information about a completely fictional book I’d invented on the spot. Not only did it provide me with a detailed synopsis, it offered character analysis, critical reception, and even quoted fictional reviews. It even suggested similar books—all with absolute conviction!

- Why this happens: AI models don’t “know” facts the way humans do. They predict what text should follow your prompt based on patterns they’ve learned from training data.

- What it teaches us: These systems aren’t databases of truth—they’re sophisticated pattern-matching machines that can produce extremely convincing fabrications.
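If you want to see the "predict what comes next" idea in miniature, here's a toy sketch. To be clear, this is nothing like a real assistant under the hood: it's a bigram model over a tiny corpus I made up, and the names `CORPUS`, `build_bigrams`, and `generate` are mine, purely for illustration. The point is just that sampling a plausible next word produces fluent-looking text about a book that doesn't exist, and nothing in the loop ever checks whether any of it is true.

```python
import random
from collections import defaultdict

# Tiny made-up "training corpus" (an assumption for illustration only).
CORPUS = (
    "the novel follows a young detective . "
    "the novel explores grief and memory . "
    "critics praised the novel for its vivid prose . "
    "critics praised the detective for her wit ."
).split()


def build_bigrams(tokens):
    """Map each word to the list of words seen right after it."""
    table = defaultdict(list)
    for current, following in zip(tokens, tokens[1:]):
        table[current].append(following)
    return table


def generate(table, start, max_words=12, seed=0):
    """Repeatedly sample a plausible next word; nothing checks for truth."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < max_words and words[-1] in table:
        words.append(rng.choice(table[words[-1]]))
    return " ".join(words)


table = build_bigrams(CORPUS)
print(generate(table, "critics"))
```

Change the `seed` and you get a different, equally confident "review" stitched together from the same patterns.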

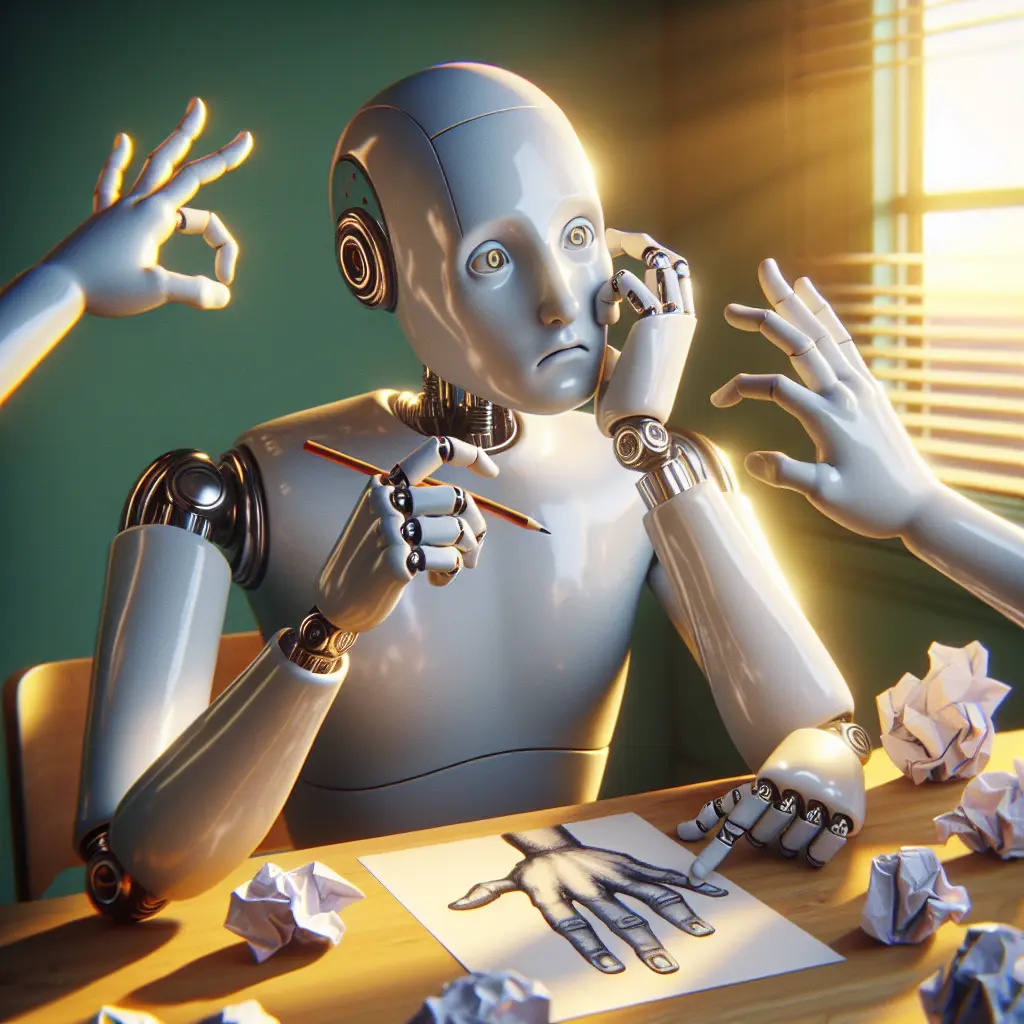

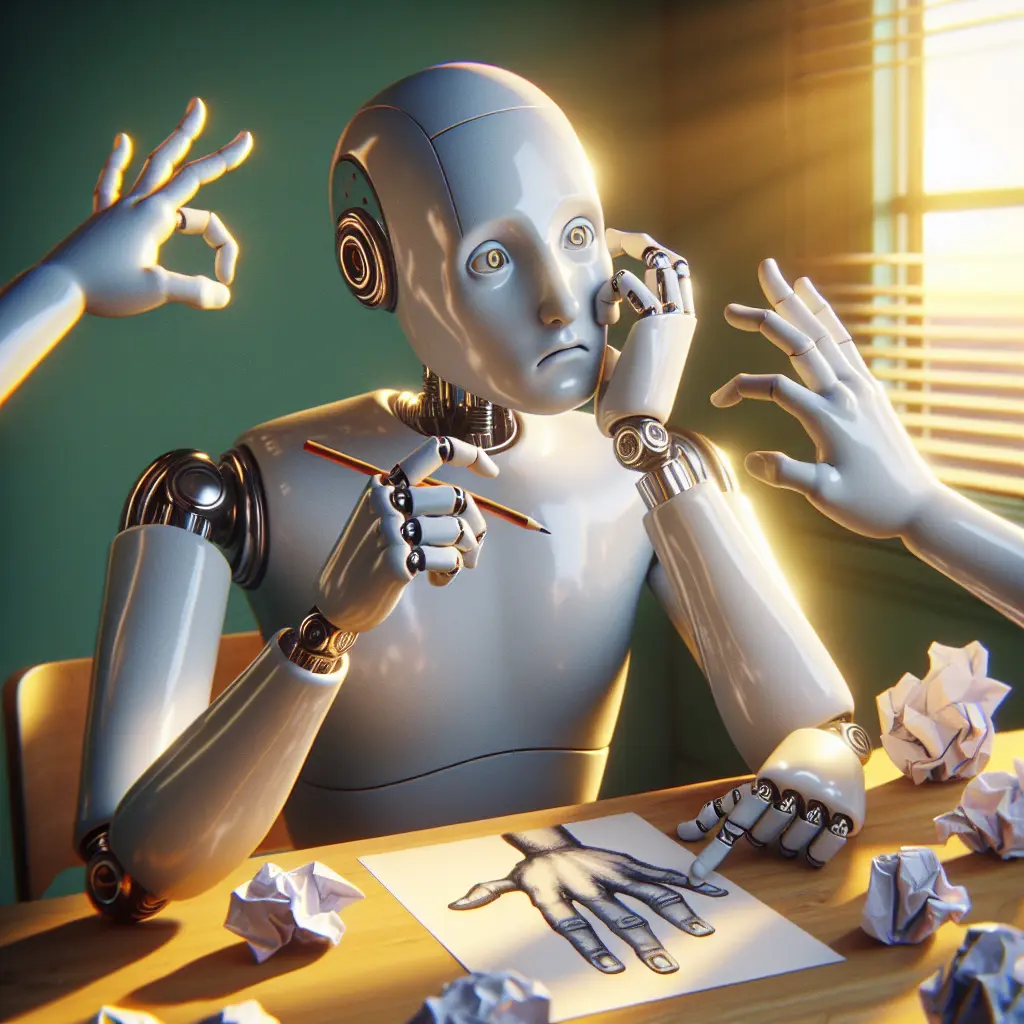

The Uncanny Valley of AI Images

If you’ve played with image generation AI, you’ve probably noticed it has… issues… with certain things. Human hands being the most notorious example. Five fingers? That’s ambitious. How about seven fingers, three thumbs, and what might be a small tentacle?

One time I asked for “a businessman shaking hands with a client” and got back what looked like two aliens exchanging cephalopod appendages while wearing human suits. It was simultaneously hilarious and deeply unsettling.

- Teeth are another AI struggle—often appearing as a uniform white bar or, worse, hundreds of tiny teeth where teeth should not be

- Text in images typically comes out as gibberish that almost looks like real words

- Background elements often melt into surrealist dreamscapes

These visual glitches aren’t just amusing—they reveal how AI “understands” visual concepts differently than humans do. It hasn’t actually learned what hands ARE functionally; it’s just seen lots of pixels in hand-like arrangements.

Mathematical Meltdowns

Despite being built on mathematics, many AI models are surprisingly terrible at actual math. Ask a language model to calculate 17 × 28, and you might get a confident “476!” (It’s actually 476. I just got lucky with that example, which is exactly how AI sometimes gets math right—by accident).

But ask something slightly more complex like “If I have 12 apples and give 3 to each of my 5 friends, how many do I have left?” and you might get “You have 9 apples left!” because it subtracted 3 from 12, completely missing that you gave away 15 apples total. That’s more than the 12 you started with, so the honest answer is that you run out three apples short.
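For the record, here's the arithmetic from the two examples above done the boring, reliable way. The variable names are just mine for illustration.

```python
# Check the multiplication from the first example.
product = 17 * 28
print(product)  # 476

# The apple problem: 12 apples, 3 given to each of 5 friends.
apples = 12
friends = 5
per_friend = 3

given_away = friends * per_friend    # 15 apples promised in total
remaining = apples - given_away      # -3: you run out before everyone is served

# The mistake described above: subtracting one friend's share only.
wrong_answer = apples - per_friend   # 9

print(given_away, remaining, wrong_answer)  # 15 -3 9
```

A negative `remaining` is the tell that the question itself is a trap, which is exactly the kind of thing a pattern-matcher glides right past.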

Lost in Translation

AI translation fails continue to be a goldmine of unintentional comedy. My personal favorite was when I asked an AI to translate a simple English phrase into Japanese, then back to English, repeating this process ten times. By the end, “I enjoy walking my dog in the park on sunny days” had morphed into “The sunshine festival celebrates canine processions through the ancestral grounds.”

Which, honestly, I kinda prefer.

What These Fails Actually Teach Us

Beyond the laughs, these AI failures reveal something important about where we are in the development of artificial intelligence:

- Pattern matching ≠ understanding – AI can recognize patterns without genuinely comprehending what they mean

- Context is everything – Small changes in how you phrase a question can lead to wildly different answers

- Confidence isn’t accuracy – AI often presents incorrect information with absolute certainty

- Human oversight is essential – We still need humans to verify AI outputs, especially for critical applications
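Here's a rough sketch of why "confidence isn't accuracy" holds. Language models typically turn raw scores into probabilities with a softmax, and that step only tells you which option scored highest, not whether it's correct. The candidate answers and scores below are numbers I invented for illustration; they don't come from any real model.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three candidate answers to "17 x 28 = ?".
candidates = ["466", "476", "486"]
scores = [6.0, 2.0, 1.0]  # made-up numbers: the wrong answer scores highest

probs = softmax(scores)
best = candidates[probs.index(max(probs))]

# The top pick gets well over 90% of the probability mass and is still wrong.
print(best, round(max(probs), 3))
```

The probabilities always add up to 1 no matter how bad the underlying scores are, so a big number here means "strongly preferred," not "verified."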

A Prompt You Can Use Today

Want to explore some entertaining AI fails yourself? Try this prompt with your favorite AI assistant:

I'd like to play a game to reveal interesting AI limitations. Generate 5 different questions or tasks that you think might confuse your language model abilities. Then try to answer each one, and honestly evaluate where you struggled or might have gotten things wrong.

What’s Next for AI?

These fails aren’t just funny—they’re signposts toward the next generations of AI development. Each limitation becomes a research problem to solve, each weird output a puzzle to unravel.

I’m gonna keep collecting these AI fails not just because they make me laugh (though they absolutely do), but because each one tells us something about how these systems work—and don’t work. Maybe someday they’ll stop making these mistakes… but until then, I’ll be here documenting the journey one bizarre hand-rendering at a time.

Frequently Asked Questions

Q: Why do AI chatbots make up information?

AI chatbots don’t actually “know” facts. They predict text based on patterns in their training data. When asked about something those patterns don’t cover, instead of saying “I don’t know,” they often generate plausible-sounding but completely fabricated responses, because producing fluent text is what they were trained to do and nothing in that process checks whether a claim is true.

Q: Are funny GPT mistakes actually harmful?

While many AI mistakes are harmless and humorous, some can be problematic or harmful, especially when people rely on AI for critical information about health, finance, or safety. Even funny mistakes highlight why we shouldn’t blindly trust AI systems without verification.

Q: How can I spot when AI gets something wrong?

Look for overly confident statements about obscure topics, logical inconsistencies, or information that seems too convenient. For factual claims, always verify with trusted sources. If an AI provides citations, actually check them—they’re often made up or misrepresented.

Conclusion: Embracing the Beautiful Mess

AI failures aren’t just entertaining blunders—they’re valuable insights into both the current limitations and future potential of artificial intelligence. Each weird image, nonsensical answer, or confidently stated falsehood tells us something important about how these systems work underneath their sleek interfaces.

As we continue developing and refining AI technology, these fails serve as both cautionary tales and guideposts for improvement. They remind us that despite impressive capabilities, AI remains a tool created by humans, reflecting our imperfections while striving toward something better.

Enjoyed this roundup of AI’s most facepalm-worthy moments? Share your favorite AI fails in the comments below, or subscribe for more tech insights that don’t take themselves too seriously!